Project Natick started in 2013 when a Microsoft employee who had served on a US Navy submarine submitted an internal white paper suggesting placing data centers underwater. In late 2014, Microsoft formally kicked off Project Natick and quickly moved to a first sea trial (Natick Phase 1) in August 2015, with the objective of validating the concept of an undersea data center. Microsoft announced the start of the second sea trial (Natick Phase 2) on 5 June 2018.

Faster Construction and Installation

The motivations for, and expected benefits of, such an underwater location are manifold. One basic driver is the ability of content and cloud service providers to deploy capacity quickly, when and where it is needed. Terrestrial data centers must cope with local building codes, taxes, climate, workforce, regulation, electricity supply, and network connectivity, all of which differ from one place to another. An undersea data center, by contrast, can be manufactured in a standard way and shipped for deployment in a subsea environment that is almost uniform across the globe. As a result, shorter lead times for construction and installation can be expected. In some ways, this new data center concept can be compared to containerized cable landing stations, which are built in a central facility before being shipped to the landing sites for installation and connection to power and communication networks.

Deploying Undersea Data Centers in the Right Locations

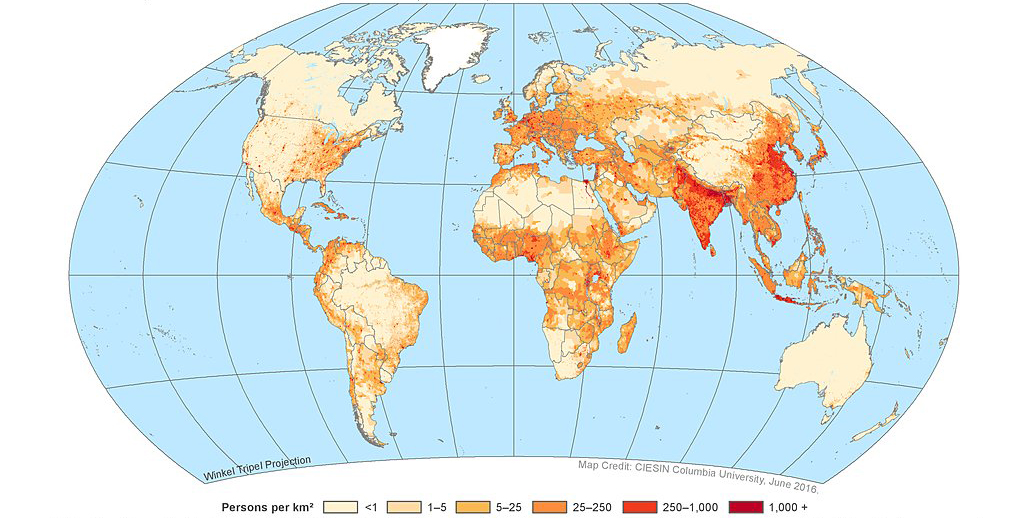

The undersea data center concept makes it possible to deploy data storage capacity where it is actually needed. That need can be relatively static (driven by population density) or dynamic (in response to changing market conditions).

As illustrated by previous posts on this blog, many new data centers are built in locations where electricity is inexpensive, the climate is reasonably cool, and land is cheap. The problem with this approach is that it often puts data centers far from population centers (which are mostly located in temperate regions), which, depending on the application, limits how quickly the servers can respond to requests. Moreover, almost 50% of the world’s population lives within 200 kilometers of the sea. Consequently, deploying data centers just offshore of coastal cities would put them closer to their users than is the norm today.
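To put the distance argument in numbers, the sketch below estimates the round-trip propagation delay over optical fiber. It assumes light travels at about 200,000 km/s in fiber and ignores routing detours, queuing, and server processing time, so real latencies are higher; the 2,000 km distance is a hypothetical remote site, not a specific data center.

```python
# Minimal sketch: round-trip propagation delay over optical fiber.
# Assumption: light travels at roughly 2.0e8 m/s in silica fiber
# (refractive index of about 1.5). Routing detours, queuing and
# processing delays are ignored, so real latencies are higher.

SPEED_IN_FIBER_M_PER_S = 2.0e8  # assumed, approximate


def round_trip_delay_ms(distance_km: float) -> float:
    """Return the round-trip propagation delay (ms) over a straight fiber path."""
    one_way_s = (distance_km * 1000.0) / SPEED_IN_FIBER_M_PER_S
    return 2.0 * one_way_s * 1000.0


# 200 km: the coastal distance quoted above; 2,000 km: a hypothetical remote site.
for km in (200, 2000):
    print(f"{km:>5} km -> {round_trip_delay_ms(km):.1f} ms round trip")
```

Propagation delay grows linearly with distance, so a site ten times farther away adds ten times the delay to every request and response, before any other overhead is counted.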

Efficient Cooling

Another obvious benefit of an undersea data center is the efficient cooling provided by the subsea environment. Terrestrial data centers traditionally relied on mechanical cooling before moving to free-air cooling. Free-air cooling favors cool, high-latitude sites, often far from population centers (note the intense data center activity in the Nordic countries, illustrated by several of our previous posts). Land-based data centers have an additional environmental drawback: their water consumption. Because it uses no external water at all for cooling, the Natick undersea design achieves by construction a perfect Water Usage Effectiveness (WUE) of 0 liters per kilowatt-hour, whereas land data centers consume up to 4.8 liters of water per kilowatt-hour. (WUE is the number of liters of water consumed per kilowatt-hour of IT equipment energy; lower values are better, and 0 is best.)
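The WUE comparison can be reproduced with a few lines of arithmetic. This sketch assumes The Green Grid's definition of WUE (annual site water usage divided by annual IT equipment energy) and a hypothetical site drawing a constant 1 MW IT load; at that scale, 4.8 L/kWh works out to about 42 million liters of water per year, against none for a sealed undersea pod.

```python
# Minimal sketch of the WUE metric (The Green Grid definition):
# WUE = annual site water usage (liters) / annual IT equipment energy (kWh).
HOURS_PER_YEAR = 8760


def wue_l_per_kwh(annual_site_water_l: float, annual_it_energy_kwh: float) -> float:
    """Return Water Usage Effectiveness in liters per kWh of IT energy."""
    if annual_it_energy_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return annual_site_water_l / annual_it_energy_kwh


# Hypothetical site with a constant 1 MW (1,000 kW) IT load for a full year.
it_energy_kwh = 1_000 * HOURS_PER_YEAR    # 8,760,000 kWh
land_water_l = 4.8 * it_energy_kwh        # water use at the 4.8 L/kWh figure
undersea_water_l = 0.0                    # no external cooling water

print(f"Land site: {wue_l_per_kwh(land_water_l, it_energy_kwh):.1f} L/kWh")
print(f"Undersea:  {wue_l_per_kwh(undersea_water_l, it_energy_kwh):.1f} L/kWh")
```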

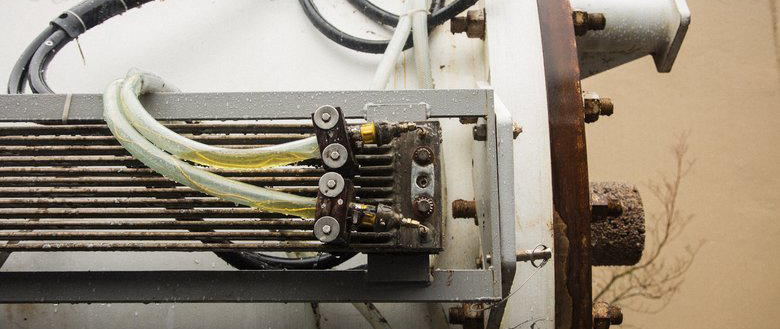

Microsoft’s Natick design consists of standard computer racks with attached heat exchangers, which transfer the heat from the air to a liquid such as ordinary water. That water is then pumped to heat exchangers on the outside of the data center vessel, which in turn transfer the heat to the surrounding ocean (refer to the fourth picture below). The cooled transfer liquid then returns to the internal heat exchangers to repeat the cycle. At depths of 200 and 500 meters, the water typically remains below 15 °C and 12 °C, respectively, all year round.
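The internal loop can be sized with the steady-state energy balance Q = ṁ·c_p·ΔT, since practically all of the electrical power drawn by the servers ends up as heat. The sketch below is illustrative only: the 250 kW heat load and the 10 K temperature rise across the racks are hypothetical values, not published Natick design parameters.

```python
# Minimal sketch: coolant mass flow needed to carry a given heat load,
# from the steady-state energy balance Q = m_dot * c_p * delta_T.
# Assumptions (not from the Natick project): the loop uses plain water,
# c_p is about 4186 J/(kg*K), and the loop warms by delta_T across the racks.

CP_WATER_J_PER_KG_K = 4186.0  # specific heat of liquid water, approximate


def required_mass_flow_kg_s(heat_load_w: float, delta_t_k: float,
                            cp_j_per_kg_k: float = CP_WATER_J_PER_KG_K) -> float:
    """Return the mass flow (kg/s) that removes heat_load_w with a delta_t_k rise."""
    if delta_t_k <= 0:
        raise ValueError("temperature rise must be positive")
    return heat_load_w / (cp_j_per_kg_k * delta_t_k)


# Hypothetical example: 250 kW of server heat, 10 K temperature rise in the loop.
flow = required_mass_flow_kg_s(250_000, 10.0)
print(f"{flow:.2f} kg/s")  # about 6 kg/s, i.e. roughly 6 liters of water per second
```

Because flow is inversely proportional to ΔT, halving the allowed temperature rise doubles the required coolant flow.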

Powering

Here, we find another analogy between traditional data centers and subsea cable landing stations. In both cases, primary power is typically delivered by the power grid, preferably over at least two physically diverse paths. In case grid power fails, the design includes backup generators, as well as battery banks in case the generators also fail. For undersea data centers, an efficient scheme would be to deploy them near offshore sources of renewable energy, be it an offshore wind farm or some form of marine power generation that exploits tides, waves, or currents. Doing so avoids transporting power over long distances and unnecessary voltage conversions.

Going to Sea for the First Time: Natick Phase 1

Natick Phase 1 was based on a small pod housing a single rack of servers. The sea trial began in August 2015, with the pod submerged at a depth of 11 meters in the Pacific Ocean near San Luis Obispo, California, USA, where water temperatures ranged between 14 and 18 °C.

Over the course of this 105-day experiment, the Microsoft team showed that it could keep the submerged computers at temperatures at least as cold as mechanical cooling can achieve, with an energy overhead even lower than that of the free-air approach: just 3%. To the best of the Microsoft engineers’ knowledge, this energy overhead is lower than that of any production or experimental data center.
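Energy overhead is closely related to the more widely quoted Power Usage Effectiveness (PUE), the ratio of total facility energy to IT equipment energy. Assuming the 3% figure counts non-IT energy relative to IT energy (the exact metric behind the figure is not specified here), it corresponds to a PUE of about 1.03, where 1.0 would mean every kilowatt-hour goes to the computers.

```python
# Minimal sketch: converting an energy-overhead fraction into PUE.
# PUE = total facility energy / IT equipment energy.
# Assumption: the 3% overhead is non-IT energy (cooling, power conversion)
# expressed as a fraction of IT energy.


def pue(it_energy_kwh: float, overhead_energy_kwh: float) -> float:
    """Return Power Usage Effectiveness for the given IT and overhead energy."""
    if it_energy_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return (it_energy_kwh + overhead_energy_kwh) / it_energy_kwh


it_kwh = 100.0
overhead_kwh = 0.03 * it_kwh  # 3% overhead under the stated assumption
print(f"PUE = {pue(it_kwh, overhead_kwh):.2f}")  # PUE = 1.03
```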

The picture above (from the Natick Phase 1 sea trial) shows the impact of biofouling (biological fouling) on any structure submerged in seawater. It is a simple fact of marine life that if a device remains within the photic zone (i.e., the uppermost layer of ocean water that receives intense sunlight), microorganisms, plants, algae, or animals will accumulate on its wetted surfaces and latch onto it, reducing the effectiveness of heat transfer.

To sidestep this biofouling issue, the Microsoft engineers used a technique common in the maritime sector: copper-nickel (Cu-Ni) alloys on the heat exchanger surfaces. Cu-Ni conducts heat well and resists corrosion, but more importantly it is also naturally resistant to biofouling, as evidenced by the picture below, taken when the undersea data center pod was retrieved after 105 days under water.

After more than three months under water, the heat exchanger surfaces were still in good condition, allowing efficient heat exchange and therefore effective cooling of the servers inside the pod.

Going to Sea Again: Natick Phase 2

Natick Phase 2 goes further in terms of both size (12 racks in the Phase 2 pod vs. 1 rack in the Phase 1 pod) and time spent under water (one full year vs. 105 days for Phase 1).

The 40-foot (about 12-meter) long Project Natick Phase 2 undersea data center is loaded with 12 racks containing a total of 864 servers, along with the associated cooling system infrastructure. This payload contains 27.6 petabytes of disk; it is as powerful as several thousand high-end consumer PCs and has enough storage for about 5 million movies. At full capacity, the data center requires just under a quarter of a megawatt of power. The pod was assembled and tested in France by Naval Group and shipped on a flatbed truck to Scotland, where it was attached to a ballast-filled triangular base for deployment on the seabed.
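The headline figures can be broken down per rack, per server, and per movie with simple arithmetic. This sketch assumes decimal units, an even spread of servers across the 12 racks, and a hypothetical 240 kW standing in for "just under a quarter of a megawatt"; under those assumptions it gives 72 servers per rack, roughly 32 TB of disk and under 300 W of pod power per server, and an implied average movie size of about 5.5 GB.

```python
# Minimal sketch: per-unit figures implied by the Phase 2 numbers.
# Assumptions: decimal units (1 PB = 1e15 bytes, 1 TB = 1e12, 1 GB = 1e9),
# servers evenly spread across racks, and 240 kW standing in for
# "just under a quarter of a megawatt" (whole-pod power, cooling included).

RACKS = 12
SERVERS = 864
STORAGE_BYTES = 27.6e15   # 27.6 PB
FULL_LOAD_W = 240_000     # assumed value for "just under 0.25 MW"
MOVIES = 5_000_000

servers_per_rack = SERVERS // RACKS
storage_per_server_tb = STORAGE_BYTES / SERVERS / 1e12
power_per_server_w = FULL_LOAD_W / SERVERS   # pod power divided by server count
implied_movie_size_gb = STORAGE_BYTES / MOVIES / 1e9

print(f"{servers_per_rack} servers per rack")
print(f"{storage_per_server_tb:.1f} TB of disk per server")
print(f"{power_per_server_w:.0f} W of pod power per server at full load")
print(f"{implied_movie_size_gb:.1f} GB per movie")
```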

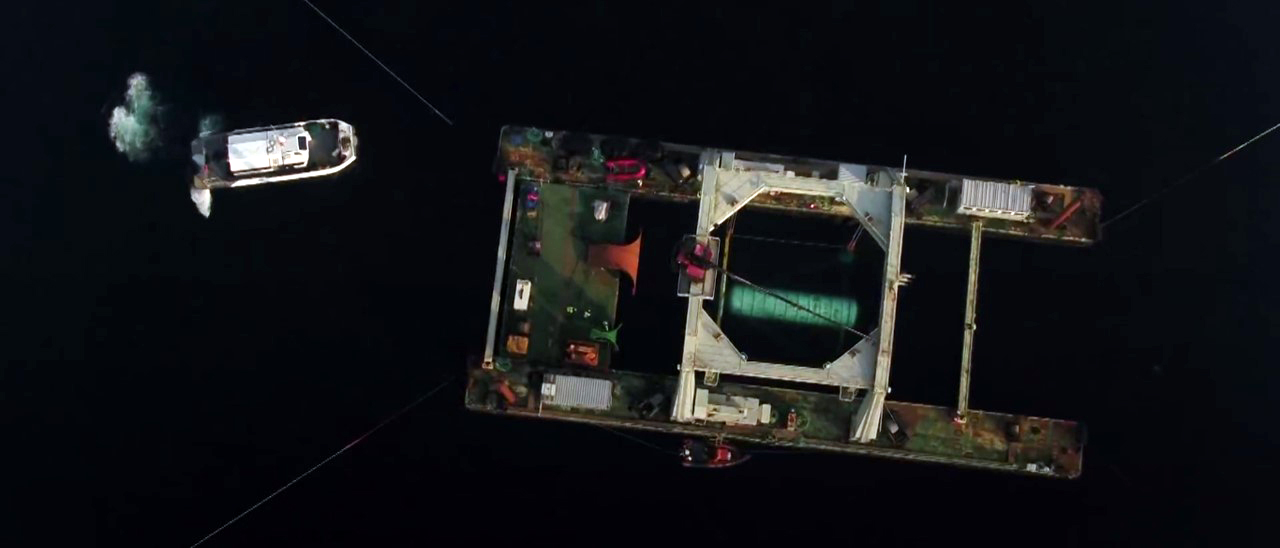

The undersea data center was towed out to sea partially submerged, cradled by winches and cranes between the pontoons of an industrial, catamaran-like gantry barge. At the deployment site, the European Marine Energy Centre in Scotland’s Orkney Islands, a remotely operated vehicle retrieved a cable containing the fiber optic and power wiring from the seafloor and brought it to the surface, where it was checked and attached to the data center, and the data center was powered on.

Using 10 winches, a crane, a gantry barge, and a remotely operated vehicle, the team lowered the pod meter by meter to the seabed, 35 meters below the surface. This second sea trial is planned to last one year, while the design target for operation without maintenance is up to 5 years.

Microsoft’s vision is for Natick data center deployments of up to 5 years, which is the anticipated lifespan of the computers contained within the vessel. After each 5-year deployment cycle, the data center pod would be retrieved, reloaded with new computers, and redeployed under water. The target lifespan of a Natick data center is at least 20 years. After that, the data center is designed to be retrieved and recycled.

Below is a series of screenshots from several videos showing the deployment of the Natick Phase 2 undersea data center pod on the seabed.

Natick Phase 2 is a step toward assessing whether the existing logistics supply chain can be used to ship and rapidly deploy modular undersea data centers anywhere in the world, even in the roughest patches of sea.

Furthermore, because the European Marine Energy Centre is a test site for experimental tidal turbines and wave energy converters that generate electricity from the movement of seawater, carrying out the sea trial there further demonstrates Microsoft’s vision of data centers with their own local, sustainable power supply. The electricity used for this sea trial is 100% locally produced renewable energy from onshore wind and solar and from offshore tidal and wave generation.

For more information, visit the Project Natick website.

For comments or questions, please contact us.